SEO is a broad field, and navigating it can be challenging and tedious: you have to understand and master a wide range of terminology and algorithms. SEO includes various techniques and strategies, and technical SEO is one of them.

It requires technical expertise and knowledge, so many marketers and website owners avoid it. However, this means missing out on significant opportunities. Some industry estimates put the ROI of technical search engine optimization as high as 117%, well above that of many other types of marketing.

Therefore, it’s important to pay special attention to technical SEO. Below is a technical SEO audit checklist that will help you strengthen your overall SEO efforts.

What Is Technical SEO?

Technical SEO focuses on optimizing technical aspects of a website to enhance its performance and improve user experience. It improves a website’s infrastructure, boosting its search engine rankings and driving quality traffic.

Earlier, technical SEO was mainly about making crawling and indexing by search engine bots more effective. Crawling is the process by which search engines discover and access web pages, whereas indexing is the process of analyzing a page and adding it to the search engine’s database.

Today, a thorough technical SEO checklist also covers page speed, site architecture, SSL certificates, URL structure, navigation, security, duplicate content, user experience, and more.

SEO professionals audit and optimize the technical factors of a website to achieve a higher position in search results and improve its performance.

Why Is Technical SEO Important?

You can create the best website with appealing visuals and keyword-rich content. However, weak technical SEO can undermine your SEO efforts and hurt the site’s ranking on SERPs. This is because search engines must be able to find, crawl, and understand a website’s content before they can index it accurately, and technical SEO is what makes that possible.

Although other types of SEO are equally important, technical SEO sets the foundation for a website’s performance and success. It gives search engines details about the content, such as metadata, internal and external links, and image descriptions, so they can crawl, understand, and index each web page.

With so many web pages competing for a place in Google’s results, neglecting technical SEO can damage your search engine rankings. Technical SEO makes it easier for search engines to process your site, which can lead to higher rankings and more organic traffic. If you want to learn the technical aspects of optimizing a website and improving its search engine rankings, take our online SEO course, which covers basic and advanced SEO topics in detail.

What Is the Technical SEO Checklist for 2025?

Optimizing a website involves many tasks and tactics that can feel overwhelming. It is a continuous process that demands expertise and focus. Here is a technical SEO checklist to help you provide a seamless user experience and boost your website’s search engine rankings.

- Make Your Website Mobile-friendly

- Secure It with HTTPS

- Work on Core Web Vitals

- Improve Page Loading Speed

- Optimize XML Sitemap

- Check for Duplicate Content

- Add Structured Data and Schema

- Identify Crawl Errors

- Optimize the Robots.txt File

- Build a User-friendly Architecture

- Improve Navigation with Breadcrumbs

1. Make Your Website Mobile-friendly

Mobile optimization is widely discussed, and businesses are focusing on on-page checklists and elements to attract more customers. However, many websites still have not embraced a mobile-first experience.

A responsive website adapts its layout to each screen size while keeping the same content and elements on desktop and mobile. It should also avoid unnecessary pop-ups that hamper the user experience. Other aspects of responsive web design include easy-to-tap buttons and links, images and text that scale to fit the screen, and sufficient whitespace.

In 2018, Google began rolling out mobile-first indexing. This means the search engine predominantly uses the mobile version of a website’s content to index and rank its pages for search results.

As mobile devices account for more than half of web traffic (over 53%, by some estimates), Google prioritizes mobile-friendly websites over those that aren’t. Such sites offer a better user experience, allowing users to access pages and services from anywhere using their phones. So, it’s important that websites automatically adjust their layout and content to different devices and screen sizes.
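One quick check you can automate is whether a page declares a responsive viewport. Responsive pages typically include `<meta name="viewport" content="width=device-width, initial-scale=1">` in their `<head>`. The Python sketch below uses only the standard library's `html.parser`; the class and function names are hypothetical, and it is a rough heuristic that does not test layout, tap targets, or pop-ups.

```python
from html.parser import HTMLParser
from typing import Optional


class ViewportFinder(HTMLParser):
    """Records the content attribute of a <meta name="viewport"> tag, if any."""

    def __init__(self) -> None:
        super().__init__()
        self.viewport: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; attrs is a list of (name, value).
        if tag == "meta":
            attr_map = dict(attrs)
            if (attr_map.get("name") or "").lower() == "viewport":
                self.viewport = attr_map.get("content") or ""


def has_responsive_viewport(html: str) -> bool:
    """Heuristic: True if the page's viewport tag sets width=device-width."""
    finder = ViewportFinder()
    finder.feed(html)
    finder.close()
    if finder.viewport is None:
        return False
    settings = [part.replace(" ", "").lower() for part in finder.viewport.split(",")]
    return "width=device-width" in settings


page = '<html><head><meta name="viewport" content="width=device-width, initial-scale=1"></head></html>'
print(has_responsive_viewport(page))  # True
```

A page that fails this check is very likely not responsive, but passing it does not prove the layout works well on small screens.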

2. Secure It with HTTPS

Security is a primary factor that users and search engines consider when evaluating a site. People prefer secure websites because they can explore and interact with them more freely and for longer. Hence, it is essential that websites are secured using HTTPS.

Earlier, e-commerce websites primarily relied on SSL to secure online transactions, and it was an essential part of any e-commerce technical SEO checklist. In 2014, Google announced that it would use HTTPS as a ranking signal, which marked a significant shift for all websites. In 2018, Google’s Chrome browser began labeling all HTTP sites as “Not secure,” highlighting the importance of HTTPS and encouraging businesses to adopt it. This was a crucial step in protecting users from growing cybercrime and steering them toward safe sites.

HTTPS, or HyperText Transfer Protocol Secure, encrypts the data exchanged between users and a website so cybercriminals can’t read or tamper with it. This protects the privacy of users and their data. To serve a website over HTTPS, you need an SSL (Secure Sockets Layer) certificate, which verifies the site’s identity and enables the encrypted connection. (Modern connections actually use TLS, the successor to SSL, but these certificates are still commonly called SSL certificates.)

As a result, website owners began installing SSL certificates to establish secure, encrypted connections. Browsers signal a secure connection in the address bar, traditionally with a lock icon before the URL.
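After installing a certificate, sites usually send all plain-HTTP requests to the HTTPS version with a permanent (301) redirect, typically configured in the web server, load balancer, or CDN. As an illustration only, here is a minimal sketch for a Python WSGI application; `force_https`, `hello_app`, and `call_app` are hypothetical names. It assumes TLS is handled by the same server; behind a proxy that terminates TLS, you would check the proxy's forwarded-protocol header instead of `wsgi.url_scheme`.

```python
from typing import Callable, Dict, Iterable, Tuple
from urllib.parse import quote

# A WSGI application takes (environ, start_response) and returns an iterable of bytes.
WSGIApp = Callable[[dict, Callable], Iterable[bytes]]


def force_https(app: WSGIApp) -> WSGIApp:
    """Wrap a WSGI app so plain-HTTP requests get a 301 redirect to the HTTPS URL."""

    def middleware(environ: dict, start_response: Callable) -> Iterable[bytes]:
        if environ.get("wsgi.url_scheme") == "https":
            return app(environ, start_response)  # already secure: serve normally

        host = environ.get("HTTP_HOST") or environ.get("SERVER_NAME", "")
        if host.endswith(":80"):
            host = host[:-3]  # drop the default HTTP port
        path = quote(environ.get("PATH_INFO") or "/")
        query = environ.get("QUERY_STRING", "")
        location = f"https://{host}{path}" + (f"?{query}" if query else "")

        # 301 marks the move as permanent, so search engines index the HTTPS URL.
        start_response("301 Moved Permanently", [("Location", location), ("Content-Length", "0")])
        return [b""]

    return middleware


def hello_app(environ: dict, start_response: Callable) -> Iterable[bytes]:
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"Hello over HTTPS"]


def call_app(app: WSGIApp, environ: dict) -> Tuple[str, Dict[str, str], bytes]:
    """Tiny test harness: run a WSGI app and collect its status, headers, and body."""
    result = {}

    def start_response(status, headers):
        result["status"], result["headers"] = status, dict(headers)

    body = b"".join(app(environ, start_response))
    return result["status"], result["headers"], body


app = force_https(hello_app)
status, headers, _ = call_app(app, {"wsgi.url_scheme": "http", "HTTP_HOST": "www.example.com",
                                    "PATH_INFO": "/shop", "QUERY_STRING": "page=2"})
print(status, headers["Location"])  # 301 Moved Permanently https://www.example.com/shop?page=2
```

The path and query string are preserved so that each HTTP URL maps to its exact HTTPS counterpart rather than to the homepage.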

3. Work on Core Web Vitals

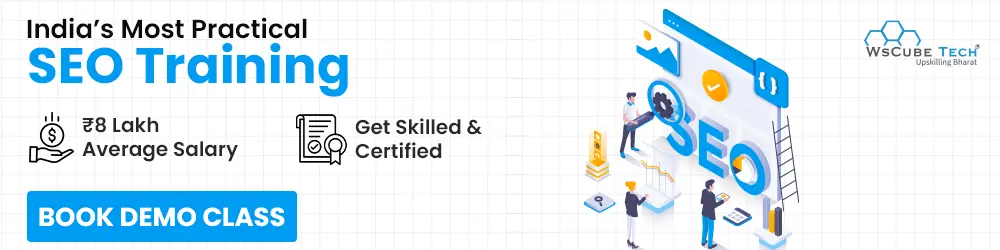

Core Web Vitals are important metrics that Google uses to evaluate a website’s user experience, and they can affect its search engine rankings. There are currently three Core Web Vitals:

Largest Contentful Paint (LCP) - It measures how long it takes the largest image or text block visible on a web page to render. For the best user experience, the largest element should load within 2.5 seconds.

Interaction to Next Paint (INP) - This metric assesses how quickly the user interface responds to clicks, taps, and key presses. INP replaced First Input Delay (FID) as a Core Web Vital in March 2024. A good INP is 200 milliseconds or less.

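Cumulative Layout Shift (CLS) - It assesses the visual stability of on-page elements, that is, how much content unexpectedly shifts while the page loads. A website should aim for a CLS score of 0.1 or less; note that CLS is a unitless score, not a time in seconds.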

Using Google Search Console’s Core Web Vitals report, you can see how your pages perform on these metrics and which URLs have issues. Various tools can help you improve Core Web Vitals and speed up loading time. A few things you can work on to improve page speed are serving images in modern, browser-supported formats (such as WebP), lazy-loading non-critical images, and improving JavaScript performance.
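To make the thresholds concrete, the sketch below classifies measured values using Google's published "good" and "needs improvement" boundaries (LCP 2.5 s / 4 s, INP 200 ms / 500 ms, CLS 0.1 / 0.25). Google assesses these at the 75th percentile of real-user page loads, so the hypothetical `rate` helper only illustrates the banding for a single value; it does not measure anything itself.

```python
from typing import Dict

# (upper bound for "good", upper bound for "needs improvement"); anything above is "poor".
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def rate(metric: str, value: float) -> str:
    """Classify one Core Web Vitals value as good, needs improvement, or poor."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


def rate_page(measurements: Dict[str, float]) -> Dict[str, str]:
    """Rate every metric supplied for a page."""
    return {metric: rate(metric, value) for metric, value in measurements.items()}


print(rate_page({"LCP": 2.1, "INP": 350, "CLS": 0.3}))
# {'LCP': 'good', 'INP': 'needs improvement', 'CLS': 'poor'}
```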

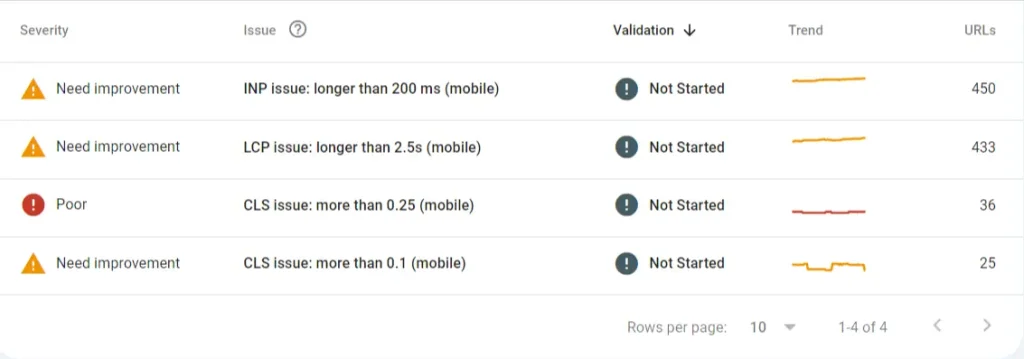

4. Improve Page Loading Speed

Google has long used desktop page speed as a ranking factor, and in 2018 it also began using page speed as a ranking factor for mobile searches. So, all else being equal, a faster-loading page has a better chance of ranking well in SERPs.

Online attention spans are short (the human attention span is often cited as around 8 seconds), so you have to capture visitors’ attention quickly to engage them with your content. A slow-loading site can cause people to lose interest, which leads to higher bounce rates and lower user satisfaction.

You can use Google’s PageSpeed Insights tool to evaluate your site’s performance and loading speed. To optimize its speed, you can consider the following tactics:

- Minimize image size

- Avoid unnecessary landing page redirects

- Use responsive images, and vector formats (such as SVG) where suitable

- Remove unnecessary plug-ins

- Compress large images

- Get better hosting services

- Use browser caching
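Browser caching is controlled with HTTP response headers such as `Cache-Control`. The sketch below shows one common policy in Python, under stated assumptions: your build tool adds a content hash to static filenames (for example `app.3f9a1c2b.js`, a hypothetical convention), so those files can be cached for a year; other static files are cached for a day; and HTML pages are revalidated on every visit. `cache_control_for` is a hypothetical helper, and the lifetimes are illustrative choices.

```python
import re

# Assumed convention: fingerprinted assets look like name.<8+ hex chars>.ext
FINGERPRINTED = re.compile(r"\.[0-9a-f]{8,}\.(?:js|css|png|jpe?g|webp|avif|svg|woff2)$")
STATIC_EXTENSIONS = (".js", ".css", ".png", ".jpg", ".jpeg", ".webp", ".avif", ".svg", ".woff2")


def cache_control_for(path: str) -> str:
    """Return a Cache-Control header value for a request path."""
    if FINGERPRINTED.search(path):
        # Safe to cache for a year: a changed file gets a new filename (and URL).
        return "public, max-age=31536000, immutable"
    if path.endswith(STATIC_EXTENSIONS):
        # Un-fingerprinted assets: cache for a day so updates appear reasonably soon.
        return "public, max-age=86400"
    # HTML and everything else: browsers may store it but must revalidate before reuse.
    return "no-cache"


for p in ["/static/app.3f9a1c2b.js", "/images/logo.png", "/blog/technical-seo/"]:
    print(p, "->", cache_control_for(p))
```

Long lifetimes are only safe when the URL changes whenever the content does, which is why the policy depends on fingerprinted filenames.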

5. Optimize XML Sitemap

An XML sitemap is a blueprint of a website that helps search engines understand its structure, so they can find, crawl, and index its content more efficiently. Many website owners overlook the XML sitemap, even though it is a vital element of any technical SEO strategy. If executed correctly, it can prove to be a powerful and valuable resource.

To help search engine bots navigate your site, the sitemap lists your important URLs in XML format, optionally with details such as each page’s last modified date. Older guides also recommend priority and change-frequency values, but Google has said it ignores them. By outlining a website’s structure, the sitemap guides crawlers to the pages that matter.

Once you create a sitemap for your website, for example with a sitemap generator, submit it through Google Search Console so Google can crawl and index your site properly. To make the sitemap more effective, leave out redirected URLs, low-value pages, and URLs blocked by robots.txt, and list only the final, canonical URL of each page.
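If you prefer to generate a sitemap yourself, here is a minimal sketch using Python's standard `xml.etree.ElementTree`. It assumes you already have a list of canonical, indexable URLs; the `build_sitemap` name and sample URLs are hypothetical. It follows the sitemaps.org 0.9 format and emits only `<loc>` and the optional `<lastmod>`.

```python
import xml.etree.ElementTree as ET
from typing import List, Optional, Tuple

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: List[Tuple[str, Optional[str]]]) -> str:
    """Build sitemap XML from (absolute canonical URL, last-modified date or None) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes &, <, > automatically
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod  # W3C date, e.g. 2025-01-15
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


sitemap = build_sitemap([
    ("https://www.example.com/", "2025-01-15"),
    ("https://www.example.com/blog/technical-seo-checklist/", "2025-01-10"),
    ("https://www.example.com/contact/", None),
])
print(sitemap)
```

You would save the output as `sitemap.xml` (commonly at the site root) and submit its URL in Search Console. The sitemaps.org protocol limits a single file to 50,000 URLs and 50 MB uncompressed, so larger sites split their URLs across several sitemaps listed in a sitemap index file.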

6. Check for Duplicate Content

As the name suggests, duplicate content refers to identical or very similar content that appears at two or more different URLs, either on the same site or on two distinct websites. Duplicate content can affect any website’s rankings, especially e-commerce platforms that sell similar products.

Search engines generally show only one version of duplicate content on results pages. As a result, even exceptional and useful content may not rank, or a version you did not intend may appear in SERPs instead.

So, the best way to fix duplicate content on your website is to set up 301 (permanent) redirects from the duplicate pages to the original one. You can also use the canonical tag to deal with this problem. A canonical tag tells search engines which version is the original, so they know which one to index.

There is also an option to delete duplicate pages, but make sure to redirect their URLs to the original page.
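For a first pass, exact duplicates can be found by hashing each page's normalized text. The Python sketch below assumes you have already extracted the main text of each page into a dict keyed by URL (the sample data and function names are hypothetical). It only catches exact matches after whitespace and case normalization; near-duplicates need a similarity measure instead. For each group, it prints the canonical tag that the duplicate pages would carry.

```python
import hashlib
import html
import re
from collections import defaultdict
from typing import Dict, List


def fingerprint(text: str) -> str:
    """Hash page text after collapsing whitespace and case, so trivial differences are ignored."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages: Dict[str, str]) -> List[List[str]]:
    """Group URLs whose main text is identical after normalization."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


def canonical_tag(url: str) -> str:
    """The <link> element to place in the <head> of every duplicate page."""
    return f'<link rel="canonical" href="{html.escape(url)}">'


pages = {
    "https://shop.example.com/shirts/blue": "Blue cotton shirt. Free shipping.",
    "https://shop.example.com/shirts/blue?ref=email": "Blue  cotton shirt.\nFree shipping.",
    "https://shop.example.com/shirts/red": "Red cotton shirt. Free shipping.",
}

for group in find_duplicates(pages):
    original = min(group, key=len)  # hypothetical rule: treat the shortest URL as the original
    print(canonical_tag(original), "for", [u for u in group if u != original])
```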

7. Add Structured Data and Schema

You must structure your website’s information so that search engines and their crawlers can understand it easily. Bots don’t interpret a web page the way humans do, so you need to describe your content in terms they can parse. This is where schema markup comes into play.

Schema markup is a shared vocabulary (from Schema.org) that search engines use to understand the content of a website. It consists of standardized types and properties that you add to a page to describe its elements in machine-readable form. When you implement schema markup, search engines can show rich results, such as star ratings or product prices, to users on SERPs.

Structured data provides information about a web page and its content, telling Google the context of the page and what it offers to users, which makes the page eligible for enhanced listings in organic results. It is usually added as schema markup in formats such as JSON-LD, Microdata, or RDFa. Because these enhanced listings give searchers more information about a page, they can improve CTR.

Companies also use schema markup to associate their brands with entities in their field, niche, or industry. This makes it more likely that a brand will appear in search results when users search for related queries.
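Google supports structured data in JSON-LD, Microdata, and RDFa formats and recommends JSON-LD. As a minimal sketch, the Python helper below generates an `Organization` block using Schema.org properties (`name`, `url`, `logo`, `sameAs`); the brand name and URLs are placeholders, and `organization_jsonld` is a hypothetical helper rather than part of any library. The resulting `<script>` element goes in the page's HTML, and you can check it with Google's Rich Results Test.

```python
import json
from typing import List


def organization_jsonld(name: str, url: str, logo: str, same_as: List[str]) -> str:
    """Return a JSON-LD <script> block describing an organization with Schema.org terms."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "logo": logo,
        "sameAs": same_as,  # other profiles that identify the same entity
    }
    body = json.dumps(data, indent=2).replace("</", "<\\/")  # never close the script early
    return '<script type="application/ld+json">\n' + body + "\n</script>"


snippet = organization_jsonld(
    name="Example Co",
    url="https://www.example.com/",
    logo="https://www.example.com/logo.png",
    same_as=["https://www.linkedin.com/company/example-co"],
)
print(snippet)
```

The `sameAs` links are what tie the brand to the same entity on other sites, which supports the entity association described above.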

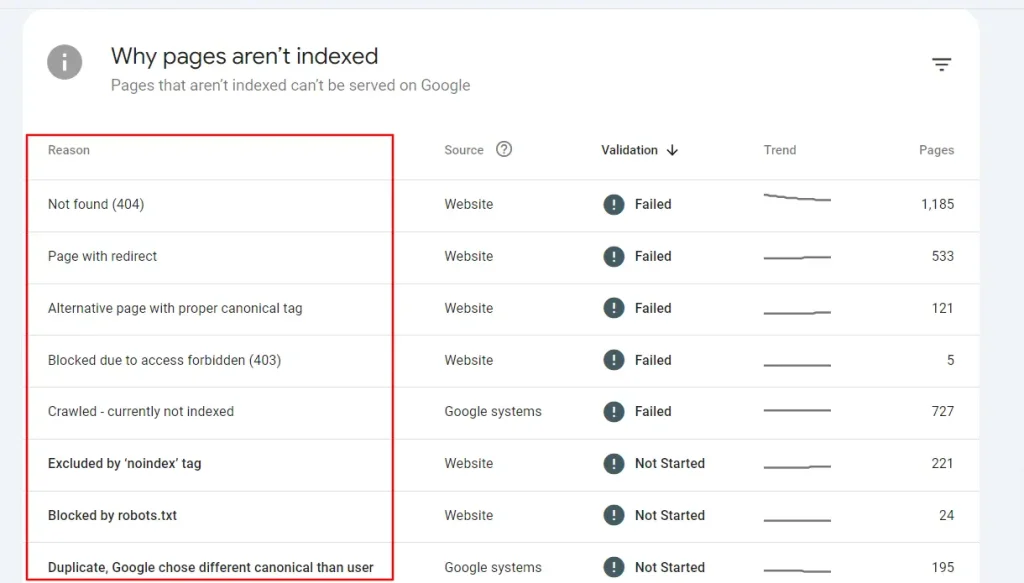

8. Identify Crawl Errors

Next in our technical SEO checklist is finding crawl errors. Crawling is how search engine bots follow links from one page to another. Search engines crawl your website’s pages to understand its purpose and how it answers users’ search queries. Hence, it’s important to build internal links as part of an effective SEO strategy, enabling search engines to navigate your website and understand the hierarchy of pages.

To maintain a well-performing and seamless website, you need to build effective internal links and make sure there are no crawl errors. Two common causes of crawl errors are:

- Broken links: These are common on websites, so you need to monitor your site for them continuously and either fix them or redirect them to a relevant live page.

- Redirect chains: If not handled correctly, redirects can harm your website rather than benefit it. A redirect chain occurs when one URL redirects to another, which redirects to another, and so on, slowing down both crawlers and users.

So, check your site regularly to find and fix crawl errors and improve your chances of ranking higher. You can use Google Search Console’s coverage report (now called the Page indexing report), which highlights pages that Google can’t crawl or index. Prioritize the most urgent issues, fix broken links, update the robots.txt file where needed, and keep running regular crawl-error audits with a tool such as Screaming Frog.
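Crawlers such as Screaming Frog report redirect chains for you, but the underlying check is simple. The Python sketch below assumes you have exported your site's redirects into a dict mapping each source URL to its target (hypothetical input; it makes no network requests). It flags chains and loops, and `flatten` computes a map in which every old URL points directly at its final destination, which is how a chain is usually fixed.

```python
from typing import Dict, List, Tuple


def follow_redirects(start: str, redirects: Dict[str, str], max_hops: int = 10) -> Tuple[List[str], str]:
    """Trace a URL through a redirect map.

    Returns the URLs visited and a status: "ok" (zero or one hop), "chain" (two or
    more hops), "loop" (a URL repeats), or "too many hops".
    """
    path = [start]
    seen = {start}
    while path[-1] in redirects:
        target = redirects[path[-1]]
        if target in seen:
            return path + [target], "loop"
        path.append(target)
        seen.add(target)
        if len(path) - 1 > max_hops:
            return path, "too many hops"
    return path, ("chain" if len(path) - 1 >= 2 else "ok")


def flatten(redirects: Dict[str, str]) -> Dict[str, str]:
    """Point every redirecting URL straight at its final destination, skipping loops."""
    flat = {}
    for source in redirects:
        path, status = follow_redirects(source, redirects)
        if status in ("ok", "chain"):
            flat[source] = path[-1]
    return flat


redirects = {
    "http://example.com/old-page": "https://example.com/old-page",
    "https://example.com/old-page": "https://example.com/new-page",
    "https://example.com/new-page": "https://example.com/final-page",
    "https://example.com/a": "https://example.com/b",
    "https://example.com/b": "https://example.com/a",
}

for url in redirects:
    trail, status = follow_redirects(url, redirects)
    if status != "ok":
        print(status, " -> ".join(trail))
```

Loops are left out of the flattened map on purpose: they have no final destination and need a manual decision about where each URL should point.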

9. Optimize the Robots.txt File

There can be various pages on a website that you would not want search engines to crawl and waste their time on. These include cart and checkout pages, admin pages, resources, and login pages.

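A robots.txt file, placed at the root of your domain, tells search engine crawlers which pages or sections of your website they may crawl. Optimizing it prevents unnecessary crawling of irrelevant pages and keeps crawlers focused on your important pages. Note that robots.txt controls crawling, not indexing: a blocked URL can still appear in SERPs if other pages link to it, so use a noindex directive for pages that must stay out of search results (and leave those pages crawlable so search engines can see the directive).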
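Here is an example robots.txt that blocks the kinds of pages listed above, checked with Python's standard `urllib.robotparser` module. The paths and domain are placeholders. Python's parser applies the first matching rule and does not understand wildcards, whereas Google uses the most specific matching rule, so keep test files like this one simple or confirm behavior in Google Search Console.

```python
from urllib.robotparser import RobotFileParser

# Placeholder robots.txt: keep crawlers out of low-value areas and point them to the sitemap.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /checkout/
Disallow: /admin/
Disallow: /login/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ["/products/blue-shirt", "/cart/", "/admin/settings"]:
    allowed = parser.can_fetch("Googlebot", "https://www.example.com" + path)
    print(f"{path}: {'crawlable' if allowed else 'blocked'}")
```

Crawlers only look for the file at the root of each host, for example `https://www.example.com/robots.txt`.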

10. Build a User-friendly Architecture

The architecture of a website refers to how its pages are grouped, organized, and linked. A well-planned site architecture helps users navigate the website seamlessly and find the information they are searching for.

When visitors can find what they seek without hassle, they stay engaged longer and spend more time on the website, which can signal to search engines that it provides valuable content. A positive user experience also encourages return visits and more traffic.

Below, we have listed a few factors that affect the architecture of a website:

- URL structure

- Navigation menu

- Internal linking

- Categorization
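A common way to audit site architecture is to measure click depth: how many clicks it takes to reach each page from the homepage. The Python sketch below runs a breadth-first search over a hypothetical internal-link map (in practice you would export this from a crawler). Deep pages, and orphan pages that nothing links to, are candidates for better internal linking or categorization.

```python
from collections import deque
from typing import Dict, List


def click_depth(links: Dict[str, List[str]], home: str = "/") -> Dict[str, int]:
    """Breadth-first search from the homepage over internal links.

    Returns the minimum number of clicks needed to reach each page.
    Pages missing from the result cannot be reached from the homepage.
    """
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit in BFS is the shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


site = {
    "/": ["/shirts/", "/blog/"],
    "/shirts/": ["/shirts/blue/", "/shirts/red/"],
    "/blog/": ["/blog/technical-seo-checklist/"],
    "/blog/technical-seo-checklist/": ["/shirts/blue/"],
    "/old-landing-page/": ["/"],  # nothing links here, so it is an orphan
}

depths = click_depth(site)
orphans = sorted(set(site) - set(depths))
print(depths)
print("orphans:", orphans)
```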

11. Improve Navigation with Breadcrumbs

Breadcrumb menus, or breadcrumb trails, show users where they are on a website and let them navigate back through its hierarchy. They are a valuable optimization because they improve user orientation by indicating the user’s current position and displaying the site’s structure.

Moreover, breadcrumb menus reduce the number of steps users take to return to the homepage or a specific section. This is a beneficial feature for websites with diverse sections and pages that need a logical structure to engage users and improve their experience.
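Breadcrumbs can also be described to search engines with Schema.org's `BreadcrumbList` type, which Google can use to display the trail in search results. The Python sketch below builds that JSON-LD from an ordered list of (name, URL) pairs, homepage first; the page names, URLs, and the `breadcrumb_jsonld` helper are hypothetical. The output would go inside a `<script type="application/ld+json">` element alongside the visible breadcrumb links.

```python
import json
from typing import List, Tuple


def breadcrumb_jsonld(trail: List[Tuple[str, str]]) -> str:
    """Build Schema.org BreadcrumbList JSON-LD from (name, URL) pairs, homepage first."""
    data = {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": i, "name": name, "item": url}
            for i, (name, url) in enumerate(trail, start=1)  # positions start at 1
        ],
    }
    return json.dumps(data, indent=2)


trail = [
    ("Home", "https://www.example.com/"),
    ("Blog", "https://www.example.com/blog/"),
    ("Technical SEO Checklist", "https://www.example.com/blog/technical-seo-checklist/"),
]
print(" > ".join(name for name, _ in trail))  # Home > Blog > Technical SEO Checklist
print(breadcrumb_jsonld(trail))
```

Generating both the visible trail and the markup from the same list keeps them from drifting apart.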

FAQs Related to Technical SEO Checklist

Technical SEO involves optimizing the technical elements of a website and its server that affect its overall performance and user experience. Two key elements influence how users and search engines find a website: crawlability and indexability.

However, technical SEO now includes various other processes that enhance a website’s search visibility, covering site speed, user experience, site architecture, security, XML sitemaps, and duplicate content.

Technical SEO directly affects a website’s performance on search results pages. It also ensures that the site is easy to navigate and free from technical glitches or errors that can hurt its search rankings.

Above all, technical SEO makes a website crawlable, because if search engines can’t access and understand a website and its content, they will not show it in SERPs. That means losing out on many opportunities, such as website traffic, sales, leads, and revenue. Technical SEO helps websites attract organic traffic and turn visitors into paying customers.

Google has also announced that it considers various technical factors, such as mobile-friendliness, security, page speed, and more, to assess a website’s quality and decide its search ranking.

Here are a few useful technical SEO tools you can use:

– Google Search Console

– Screaming Frog

– Semrush

– Ahrefs

– Google Analytics

– WebPageTest

– Web Developer Toolbar

Core Web Vitals are three metrics that Google uses to measure a website’s user experience. These metrics are:

Largest Contentful Paint (LCP) evaluates the loading speed of a web page’s biggest element.

Interaction to Next Paint (INP) measures the responsiveness of the user interface.

Cumulative Layout Shift (CLS) measures the visual stability of a web page.

A few common causes of crawl errors are broken links and redirect chains. A redirect chain is a situation where one link redirects the user to another link, then another, and another, and so on.

Three common types of SEO checklists are:

Shared document: a spreadsheet or similar file used to track SEO progress.

Real-time checklist: a checklist where you can see updates and respond to them in real time.

AI-powered checklist: a checklist generated dynamically with AI to help you manage your SEO.

Conclusion

Technical SEO is an indispensable part of any SEO campaign. It optimizes a website’s structure, which improves search engine crawling and indexing. We hope this 2025 technical SEO checklist comes in handy as you get started and improves your chances of ranking higher in SERPs.

Learn SEO from industry leaders and experts with our SEO course, which focuses on practical learning and hands-on experience. We offer live interactive classes to make your learning more engaging and effective. Connect with us now to register for the demo class.
